NOTE: Most of the text from this post can be found here. I think that post is excellent. But I also wanted to modify and expand it. Hence this post.

How does the Internet work? Suppose you curl www.google.com. What happens? Before we talk about the steps in sending a message over the Internet, we will talk about protocols, packets, routers, and NATs.

The Internet works through a packet routing network in accordance with the Internet Protocol (IP), the Transmission Control Protocol (TCP), and other protocols.

What’s a protocol?

A protocol is a set of rules specifying how computers should communicate with each other over a network. For example, the Transmission Control Protocol has a rule that if one computer sends data to another computer, the destination computer should let the source computer know if any data was missing so the source computer can re-send it. Another example is the Internet Protocol, which specifies how computers should route information to other computers by attaching addresses to the data they send.

What’s a packet?

Data sent across the Internet is called a message. Before a message is sent, it is first split into many fragments called packets. These packets are sent independently of each other. The typical maximum packet size is between 1000 and 3000 bytes; 1500 bytes is a common limit on Ethernet links. The Internet Protocol specifies how messages should be packetized.
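As a rough illustration of packetizing, here is a minimal Python sketch. The `Packet` class, its field names, and the 1500-byte default are assumptions made for this example; real IP and TCP headers carry many more fields, and the headers themselves take up part of the size limit.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Packet:
    seq: int        # position of this fragment within the message (hypothetical field)
    total: int      # how many fragments make up the whole message (hypothetical field)
    payload: bytes  # the fragment itself

def packetize(message: bytes, max_payload: int = 1500) -> list[Packet]:
    """Split a message into numbered packets of at most max_payload bytes."""
    chunks = [message[i:i + max_payload] for i in range(0, len(message), max_payload)]
    return [Packet(seq, len(chunks), chunk) for seq, chunk in enumerate(chunks)]

packets = packetize(b"x" * 4000)
print([len(p.payload) for p in packets])  # [1500, 1500, 1000]
```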

What’s a packet routing network?

It is a network that routes packets from a source computer to a destination computer. The Internet is made up of a massive network of specialized computers called routers. Each router’s job is to know how to move packets along from their source to their destination. A packet will have moved through multiple routers during its journey.

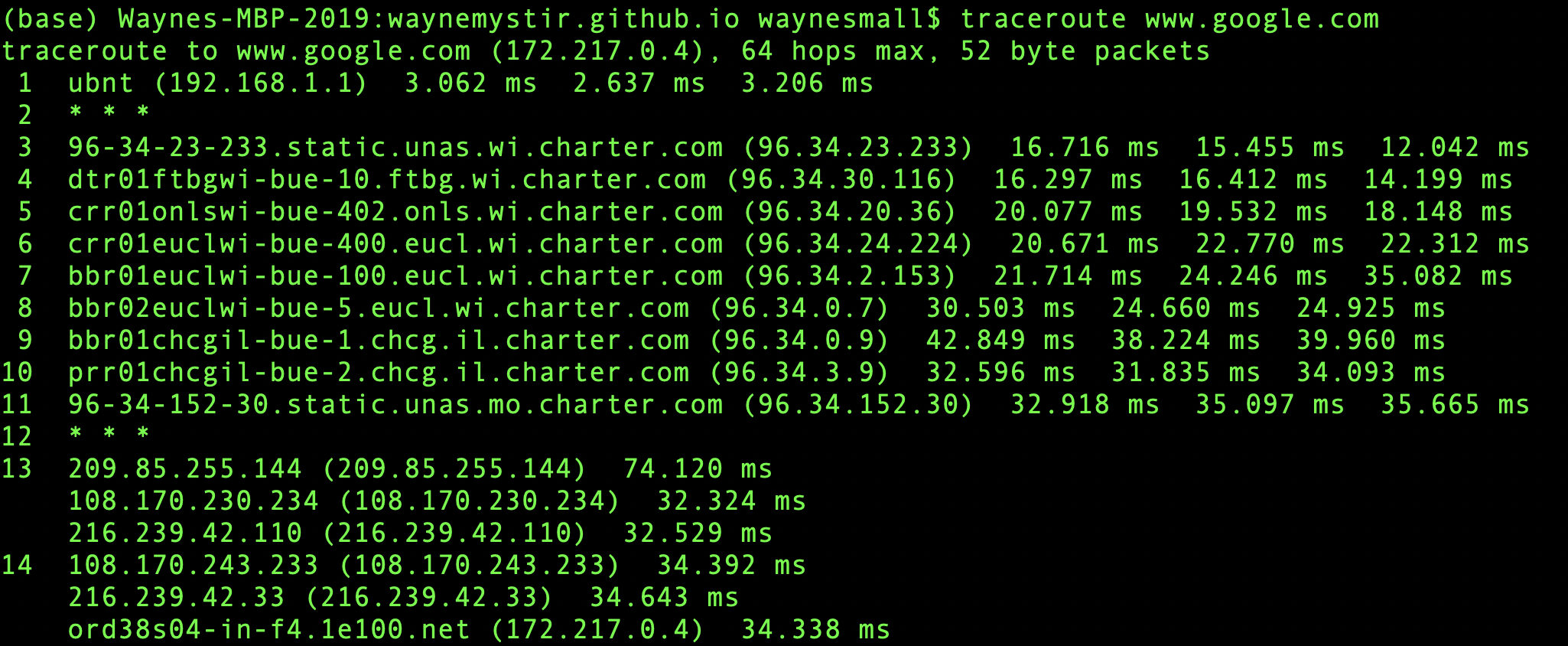

When a packet moves from one router to the next, it’s called a hop. You can use the command-line tool traceroute to see the list of hops that packets take between you and a host.

The Internet Protocol specifies how network addresses should be attached to the packet’s headers, a designated space in the packet containing its meta-data. The Internet Protocol also specifies how the routers should forward the packets based on the address in the header.

Where did these Internet routers come from? Who owns them?

These routers originated in the 1960s as ARPANET, a military project whose goal was a computer network that was decentralized so the government could access and distribute information in the case of a catastrophic event. Since then, a number of Internet Service Provider (ISP) corporations have added their own routers to this network.

There is no single owner of these Internet routers, but rather multiple owners: The government agencies and universities associated with ARPANET in the early days and ISP corporations like AT&T and Verizon later on. Asking who owns the Internet is like asking who owns all the telephone lines. No one entity owns them all; many different entities own parts of them.

Do the packets always arrive in order? If not, how is the message re-assembled?

The packets may arrive at their destination out of order. This happens when a later packet finds a quicker path to the destination than an earlier one. But each packet’s header contains information about the packet’s order relative to the entire message. The Transmission Control Protocol uses this information to reconstruct the message at the destination.
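The reordering idea can be sketched in a few lines of Python. This is a simplification under stated assumptions: each packet is modelled as a `(sequence_number, payload)` pair, whereas real TCP sequence numbers count bytes rather than packets.

```python
import random

def reassemble(packets: list[tuple[int, bytes]]) -> bytes:
    """Rebuild a message from (sequence_number, payload) pairs in any order."""
    ordered = sorted(packets, key=lambda pair: pair[0])
    return b"".join(payload for _, payload in ordered)

fragments = [(0, b"How does "), (1, b"the Internet "), (2, b"work?")]
shuffled = fragments[:]
random.shuffle(shuffled)     # simulate out-of-order arrival
print(reassemble(shuffled))  # b'How does the Internet work?'
```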

Do packets always make it to their destination?

The Internet Protocol makes no guarantee that packets will always arrive at their destinations. When a packet fails to arrive, it’s called packet loss. This typically happens when a router receives more packets than it can process and has no option other than to drop some of them.

However, the Transmission Control Protocol handles packet loss by performing re-transmissions. It does this by having the destination computer periodically send acknowledgement packets back to the source computer indicating how much of the message it has received and reconstructed. If the destination computer finds there are missing packets, it sends a request to the source computer asking it to resend the missing packets. When two computers are communicating through the Transmission Control Protocol, we say there is a TCP connection between them.
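To make the acknowledge-and-resend loop concrete, here is a deliberately simplified Python simulation. It sends one chunk at a time and waits for each acknowledgement (a "stop-and-wait" scheme); real TCP is more elaborate, with sliding windows and timers. The function name and the `drop_first_attempt` parameter are made up for this sketch.

```python
def send_reliably(chunks, drop_first_attempt):
    """Deliver chunks in order over a lossy link, resending until each is acknowledged.

    drop_first_attempt is a set of chunk indexes whose first transmission is lost.
    Returns (received_chunks, number_of_transmissions).
    """
    received = []
    transmissions = 0
    already_dropped = set()
    next_needed = 0  # the receiver's acknowledgement: index of the next chunk it expects
    while next_needed < len(chunks):
        transmissions += 1
        lost = next_needed in drop_first_attempt and next_needed not in already_dropped
        if lost:
            already_dropped.add(next_needed)  # no acknowledgement comes back, so resend
            continue
        received.append(chunks[next_needed])
        next_needed += 1  # the acknowledgement advances past the delivered chunk
    return received, transmissions

data, sent = send_reliably(["a", "b", "c", "d"], drop_first_attempt={1, 3})
print(data, sent)  # ['a', 'b', 'c', 'd'] 6
```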

What do these Internet addresses look like?

These addresses are called IP addresses and there are two standards.

The first address standard is called IPv4 and it looks like 212.78.1.25. IPv4 only supports $2^{32}$ (about 4 billion) possible addresses, and there are currently more than 8 billion networked devices on the Internet.

So the Internet Engineering Task Force (IETF) proposed a new address standard called IPv6. This address looks like 3ffe:1893:3452:4:345:f345:f345:42fc and supports $2^{128}$ possible addresses. This provides many more possible addresses than the number of networked devices.

There is a one-to-one mapping between IPv4 addresses and a subset of the IPv6 addresses. Note that the switch from IPv4 to IPv6 is still in progress and will take a long time. As of 2014, Google revealed that their IPv6 traffic was only at 3%.
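Python's standard `ipaddress` module can be used to poke at both address formats. The sketch below reuses the two example addresses from above and shows one such mapping, the "IPv4-mapped" form `::ffff:a.b.c.d`; it is offered as an illustration of the idea, not as the only mapping scheme in use.

```python
import ipaddress

v4 = ipaddress.ip_address("212.78.1.25")
v6 = ipaddress.ip_address("3ffe:1893:3452:4:345:f345:f345:42fc")
print(v4.version, v6.version)          # 4 6

print(2 ** 32)                         # 4294967296 possible IPv4 addresses
print(2 ** 128 // 2 ** 32 == 2 ** 96)  # True: IPv6 has 2^96 times as many

# An IPv4-mapped IPv6 address embeds the IPv4 address in its low 32 bits.
mapped = ipaddress.IPv6Address("::ffff:212.78.1.25")
print(mapped.ipv4_mapped)              # 212.78.1.25
```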

How can there be over 8 billion networked devices on the Internet if there are only about 4 billion IPv4 addresses?

It’s because there are public and private IP addresses. Multiple devices on a local network connected to the Internet will share the same public IP address. Within the local network, these devices are differentiated from each other by private IP addresses, typically of the form 192.168.x.x, 172.16.x.x, or 10.x.x.x, where x is a number between 0 and 255. These private IP addresses are typically assigned by the Dynamic Host Configuration Protocol (DHCP).
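As a quick check of which addresses fall in the private ranges, the standard `ipaddress` module exposes an `is_private` property. The `describe` helper and the sample addresses are just for illustration.

```python
import ipaddress

def describe(text: str) -> str:
    """Label an address as private (local network only) or public."""
    return "private" if ipaddress.ip_address(text).is_private else "public"

for text in ["192.168.1.10", "172.16.5.4", "10.0.0.7", "212.78.1.25"]:
    print(text, describe(text))
```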

For example, suppose a laptop and a smart phone on the same local network both make a request to www.google.com. Before the packets leave the modem (or local router), the modem modifies the packet headers and assigns one of its ports to each device’s connection. When the Google server responds to the requests, it sends data back to the modem at that specific port, so the modem knows whether to route the packets to the laptop or the smart phone.

In this sense, an IP address isn’t specific to a computer so much as to the connection through which the computer reaches the Internet. The address that is specific to your computer’s network hardware is the MAC address, which is assigned by the manufacturer and normally stays the same throughout the life of the device.

This technique of mapping private IP addresses to public IP addresses is called Network Address Translation (NAT). It’s what makes it possible to support 8+ billion networked devices with only 4 billion possible IPv4 addresses.
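The port-based bookkeeping described above might look roughly like this in Python. The `Nat` class, its method names, and the starting port 40000 are all invented for this sketch; a real NAT device also tracks protocols, timeouts, and remote endpoints.

```python
class Nat:
    """Toy port-based NAT: maps (private_ip, private_port) to a public port and back."""

    def __init__(self, public_ip: str, first_port: int = 40000):
        self.public_ip = public_ip
        self.next_port = first_port  # arbitrary starting port, assumed for the example
        self.outbound = {}  # (private_ip, private_port) -> public_port
        self.inbound = {}   # public_port -> (private_ip, private_port)

    def translate_out(self, private_ip: str, private_port: int) -> tuple[str, int]:
        """Rewrite the source of an outgoing packet to the shared public address."""
        key = (private_ip, private_port)
        if key not in self.outbound:
            self.outbound[key] = self.next_port
            self.inbound[self.next_port] = key
            self.next_port += 1
        return self.public_ip, self.outbound[key]

    def translate_in(self, public_port: int) -> tuple[str, int]:
        """Find which private device a reply arriving on public_port belongs to."""
        return self.inbound[public_port]

nat = Nat("212.78.1.25")
laptop = nat.translate_out("192.168.1.10", 51000)
phone = nat.translate_out("192.168.1.11", 51000)
print(laptop, phone)               # ('212.78.1.25', 40000) ('212.78.1.25', 40001)
print(nat.translate_in(phone[1]))  # ('192.168.1.11', 51000)
```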

Router

How does a router know where to send a packet? Does it need to know where all the IP addresses are on the Internet? The short answer is that the router knows through routing protocols where this IP exists. The longer answer:

Every router does not need to know where every IP address is. It only needs to know which one of its neighbors, called an outbound link, to route each packet to. Note that IP Addresses can be broken down into two parts, a network prefix and a host identifier. For example, 129.42.13.69 can be broken down into

Network Prefix: 129.42

Host Identifier: 13.69

All networked devices that connect to the Internet through a single connection (e.g. a college campus, a business, or an ISP in a metro area) share the same network prefix. Routers will send all packets of the form 129.42.x.x to the same location. So instead of keeping track of billions of IP addresses, routers only need to keep track of fewer than a million network prefixes.
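The prefix and host split for the 129.42.13.69 example can be reproduced with the standard `ipaddress` module. The sketch assumes the prefix is exactly the first 16 bits (written `/16`), matching the example; in practice prefix lengths vary from network to network.

```python
import ipaddress

network = ipaddress.ip_network("129.42.0.0/16")  # prefix 129.42, 16 bits long
address = ipaddress.ip_address("129.42.13.69")

print(address in network)                             # True
print(ipaddress.ip_address("129.43.0.1") in network)  # False
print(network.num_addresses)                          # 65536

# The host identifier is whatever remains after masking off the prefix bits.
host_bits = int(address) & int(network.hostmask)
print(host_bits)                                      # 3397, i.e. 13 * 256 + 69
```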

Routing Table

But a router still needs to know a lot of network prefixes. If a new router is added to the Internet, how does it know how to handle packets for all these network prefixes?

A new router may come with a few preconfigured routes. But if it encounters a packet it does not know how to route, it queries one of its neighboring routers. If the neighbor knows how to route the packet, it sends that info back to the requesting router. The requesting router will save this info for future use. In this way, a new router builds up its own routing table, a database of network prefixes to outbound links. If the neighboring router does not know, it queries its neighbors and so on.
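A routing table lookup can be sketched as a dictionary from network prefixes to outbound links. When several prefixes contain the destination, routers pick the most specific one (the "longest prefix match"). The link names and the table contents below are hypothetical.

```python
import ipaddress

# Hypothetical routing table: network prefix -> outbound link name.
routing_table = {
    ipaddress.ip_network("129.42.0.0/16"): "link-A",
    ipaddress.ip_network("129.42.13.0/24"): "link-B",
    ipaddress.ip_network("0.0.0.0/0"): "link-default",  # matches everything
}

def next_hop(destination: str) -> str:
    """Return the outbound link of the most specific prefix containing destination."""
    addr = ipaddress.ip_address(destination)
    matches = [net for net in routing_table if addr in net]
    best = max(matches, key=lambda net: net.prefixlen)
    return routing_table[best]

print(next_hop("129.42.13.69"))  # link-B
print(next_hop("129.42.200.1"))  # link-A
print(next_hop("8.8.8.8"))       # link-default
```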

First Step in sending Internet message: Resolve the IP address

First our computer must look up the IP address corresponding to the domain name www.google.com. We call this process “resolving the IP address”. Computers resolve IP addresses through the Domain Name System (DNS). This is a decentralized database of mappings from domain names to IP addresses.

To resolve an IP address, the computer first checks its local DNS cache, which is a dictionary mapping the domain names it has looked up recently (the keys) to their IP addresses (the values). If it cannot resolve the IP address there or if that IP address record has expired, then it queries the ISP’s DNS servers. These servers are dedicated to resolving IP addresses. If the ISP’s DNS servers can’t resolve the IP address, they query the root name servers, which direct them to the name servers responsible for the relevant top-level domain. The top-level domain is the part to the right of the right-most period in a domain name; .com, .net, and .org are some examples.
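The check-the-cache-first behaviour can be sketched as follows. Everything here is illustrative: `DnsCache`, `fake_upstream`, the 300-second time-to-live, and the address 203.0.113.7 (taken from a range reserved for documentation) are assumptions, and no real DNS query is made.

```python
import time

class DnsCache:
    """Toy resolver cache: answers from memory until a record's time-to-live expires."""

    def __init__(self, upstream, clock=time.monotonic):
        self.upstream = upstream  # function: name -> (ip, ttl_seconds)
        self.clock = clock
        self.records = {}         # name -> (ip, expires_at)

    def resolve(self, name: str) -> str:
        cached = self.records.get(name)
        if cached and cached[1] > self.clock():
            return cached[0]           # fresh cache hit
        ip, ttl = self.upstream(name)  # miss or expired record: ask upstream
        self.records[name] = (ip, self.clock() + ttl)
        return ip

calls = []

def fake_upstream(name):
    """Stand-in for the ISP's DNS server; records each query it receives."""
    calls.append(name)
    return "203.0.113.7", 300  # made-up answer and TTL

cache = DnsCache(fake_upstream)
cache.resolve("www.example.com")
cache.resolve("www.example.com")
print(len(calls))  # 1: the second lookup was served from the cache
```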

Once your computer has resolved the IP address, it starts sending the curl request to your modem or local router with this destination IP.

Second Step in sending Internet message: Send the packets to the NAT

Your local router functions as a mini-router and a NAT (and a Wifi connection point). Once it is given the message with the destination IP in the header, the NAT takes over as we described above.

Note that I just made up the term ‘mini-router’. This is not an official or common term. I just mean to say that your local router may act like an Internet router with a routing table but on a much smaller scale.

How do applications communicate over the Internet?

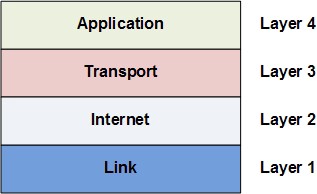

Like many other complex engineering projects, the Internet is broken down into smaller independent components, which work together through well-defined interfaces. These components are called the Internet Network Layers and they consist of Link Layer, Internet Layer, Transport Layer, and Application Layer. These are called layers because they are built on top of each other; each layer uses the capabilities of the layers beneath it without worrying about their implementation details.

Internet applications work at the Application Layer and don’t need to worry about the details in the underlying layers. For example, an application connects to another application on the network via TCP using a construct called a socket, which abstracts away the gritty details of routing packets and re-assembling packets into messages.
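Here is a small, self-contained sketch of two applications talking through TCP sockets, using only Python's standard library. Both ends run in one process over the loopback address 127.0.0.1 so the example needs no outside network; the upper-casing "protocol" is invented for the demo.

```python
import socket
import threading

def serve_once(listener: socket.socket) -> None:
    """Accept one connection and send back whatever arrives, upper-cased."""
    conn, _ = listener.accept()
    with conn:
        conn.sendall(conn.recv(1024).upper())

# The server listens on the loopback interface; port 0 lets the OS pick a free port.
listener = socket.create_server(("127.0.0.1", 0))
port = listener.getsockname()[1]
thread = threading.Thread(target=serve_once, args=(listener,))
thread.start()

# The client initiates the TCP connection and exchanges bytes through its socket.
with socket.create_connection(("127.0.0.1", port)) as client:
    client.sendall(b"hello")
    reply = client.recv(1024)

thread.join()
listener.close()
print(reply)  # b'HELLO'
```

Notice that neither side deals with packets, routing, or re-transmission; the socket hides all of that.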

What does each of these Internet layers do?

At the lowest level is the Link Layer which is the “physical layer” of the Internet. The Link Layer is concerned with transmitting data bits through some physical medium like fiber-optic cables or wifi radio signals.

On top of the Link Layer is the Internet Layer. The Internet Layer is concerned with routing packets to their destinations. The Internet Protocol mentioned earlier lives in this layer (hence the same name). The Internet Protocol dynamically adjusts and reroutes packets based on network load or outages. Note that it does not guarantee packets always make it to their destination; it just tries the best it can.

On top of the Internet Layer is the Transport Layer. This layer compensates for the fact that data can be lost in the Internet and Link layers below. The Transmission Control Protocol mentioned earlier lives at this layer, and it works primarily to re-assemble packets into their original messages and to re-transmit packets that were lost.

The Application Layer sits on top. This layer uses all the layers below to handle the complex details of moving the packets across the Internet. It lets applications easily make connections with other applications on the Internet with simple abstractions like sockets. The HTTP protocol which specifies how web browsers and web servers should interact lives in the Application Layer. The IMAP protocol which specifies how email clients should retrieve email lives in the Application Layer. The FTP protocol which specifies a file-transferring protocol between file-downloading clients and file-hosting servers lives in the Application Layer.
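One way to picture the layering is that each layer wraps the data handed down from the layer above with its own header, and the receiver unwraps in reverse order. The sketch below uses made-up text headers (`TCP|`, `IP|`, `ETH|`) purely for illustration; real headers are binary structures with many fields.

```python
# Made-up header markers, listed from the outermost layer to the innermost.
HEADERS = (b"ETH|", b"IP|", b"TCP|")

def encapsulate(payload: bytes) -> bytes:
    """Wrap application data in one header per layer, top layer first."""
    segment = b"TCP|" + payload  # Transport Layer adds its header
    packet = b"IP|" + segment    # Internet Layer wraps the segment
    frame = b"ETH|" + packet     # Link Layer wraps the packet
    return frame

def decapsulate(frame: bytes) -> bytes:
    """Strip the headers in the reverse order on the receiving side."""
    for header in HEADERS:
        if not frame.startswith(header):
            raise ValueError(f"expected header {header!r}")
        frame = frame[len(header):]
    return frame

wire = encapsulate(b"GET / HTTP/1.1")
print(wire)               # b'ETH|IP|TCP|GET / HTTP/1.1'
print(decapsulate(wire))  # b'GET / HTTP/1.1'
```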

What’s a client versus a server?

While clients and servers are both applications that communicate over the Internet, clients are “closer to the user” in that they are more user-facing applications like web browsers, email clients, or smart phone apps. Servers are applications running on a remote computer with which the client communicates over the Internet when it needs to.

A more formal definition is that the application that initiates a TCP connection is the client, while the application that receives the TCP connection is the server.

How can sensitive data like credit cards be transmitted securely over the Internet?

In the early days of the Internet, it was enough to ensure that the network routers and links were in physically secure locations. But as the Internet grew in size, more routers meant more points of vulnerability. Furthermore, with the advent of wireless technologies like WiFi, hackers could intercept packets in the air; it was not enough to just ensure the network hardware was physically safe. The solution to this was encryption and authentication through SSL/TLS.

What is SSL/TLS?

SSL stands for Secure Sockets Layer. TLS stands for Transport Layer Security. SSL was first developed by Netscape in 1994, but a later, more secure version was devised and renamed TLS. We will refer to them together as SSL/TLS.

SSL/TLS is an optional layer that sits between the Transport Layer and the Application Layer. It allows secure Internet communication of sensitive information through encryption and authentication.

Encryption means the client can request that the TCP connection to the server be encrypted. This means all messages sent between client and server will be encrypted before being broken into packets. If hackers intercept these packets, they would not be able to reconstruct the original message. Authentication means the client can trust that the server is who it claims to be. This protects against man-in-the-middle attacks, which is when a malicious party intercepts the connection between client and server to eavesdrop and tamper with their communication.

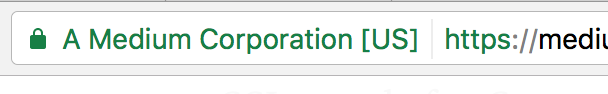

We see SSL in action whenever we visit SSL-enabled websites on modern browsers. When the browser requests a web site using the https protocol instead of http, it’s telling the web server it wants an SSL encrypted connection. If the web server supports SSL, a secure encrypted connection is made and we would see a lock icon next to the address bar on the browser.

The medium.com web server is SSL-enabled. The browser can connect to it over https to ensure that communication is encrypted. The browser is also confident it is communicating with a real medium.com server, and not a man-in-the-middle.

How does SSL authenticate the identity of a server and encrypt their communication?

It uses asymmetric encryption and SSL certificates.

Asymmetric encryption is an encryption scheme which uses a public key and a private key. These keys are basically just numbers derived from large primes. The private key is used to decrypt data and sign documents. The public key is used to encrypt data and verify signed documents. Unlike symmetric encryption, asymmetric encryption means the ability to encrypt does not automatically confer the ability to decrypt. It does this by using principles in a mathematical branch called number theory.
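To make the public/private key idea tangible, here is a toy example using textbook RSA, one well-known asymmetric scheme (the text above does not name a specific algorithm, so RSA is my choice for illustration). The primes are tiny and there is no padding, so this is for intuition only and not remotely secure.

```python
# Toy key pair built from two tiny primes (real keys use primes hundreds of digits long).
p, q = 61, 53
n = p * q                # 3233, part of both the public and the private key
phi = (p - 1) * (q - 1)  # 3120
e = 17                   # public exponent
d = pow(e, -1, phi)      # private exponent: modular inverse of e (needs Python 3.8+)

message = 65
ciphertext = pow(message, e, n)    # anyone holding the public key (e, n) can encrypt
recovered = pow(ciphertext, d, n)  # only the holder of the private exponent d can decrypt

print(d, ciphertext, recovered)    # 2753 2790 65
```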

An SSL certificate is a digital document that consists of a public key assigned to a web server. These SSL certificates are issued to the server by certificate authorities. Operating systems, mobile devices, and browsers come with a database of some certificate authorities so they can verify SSL certificates.

When a client requests an SSL-encrypted connection with a server, the server sends back its SSL certificate. The client checks that the SSL certificate is issued to this server, is signed by a trusted certificate authority, and has not expired. The client then uses the SSL certificate’s public key to encrypt a randomly generated temporary secret key and send it back to the server. Because the server has the corresponding private key, it can decrypt the client’s temporary secret key. Now both client and server know this temporary secret key, so they can both use it to symmetrically encrypt the messages they send to each other. They will discard this temporary secret key after their session is over.
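In Python, the client-side certificate checks are configured through the standard `ssl` module. The short sketch below only inspects the default settings and opens no network connection; it shows that a default client context insists on a certificate from a trusted authority and on the hostname matching.

```python
import ssl

# A default client-side context loads the system's trusted certificate authorities.
context = ssl.create_default_context()

print(context.verify_mode == ssl.CERT_REQUIRED)  # True: the server must present a valid certificate
print(context.check_hostname)                    # True: the certificate must match the server's name
```

To actually perform the handshake, a program would pass a connected TCP socket to `context.wrap_socket(sock, server_hostname=...)`; that step is left out here because it requires a live server.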

What happens if a hacker intercepts an SSL-encrypted session?

Suppose a hacker intercepted every message sent between the client and the server. The hacker sees the SSL certificate the server sends as well as the client’s encrypted temporary secret key. But because the hacker doesn’t have the private key, they can’t decrypt the temporary secret key. And because they don’t have the temporary secret key, they can’t decrypt any of the messages between the client and server.

HTTP & REST

As mentioned above, HTTP resides in the Application Layer. Conceptually, HTTP takes a stateful operation and converts it into stateless resources. In effect, you’re pushing state to the client and the server only responds to a defined list of stateless functions: head, get, post, put, delete, etc. This paradigm reduces the complexity and workload on the server and slightly increases the workload on the client.

REST is a software architectural style that defines a set of constraints to be used for creating Web services. These constraints are:

- Client-Server: Separating the user interface concerns from the data storage concerns improves the portability of the user interfaces across multiple platforms. It also improves scalability by simplifying the server components. Perhaps most significant to the Web, however, is that the separation allows the components to evolve independently, thus supporting the Internet-scale requirement of multiple organizational domains.

- Stateless: the HTTP functions should be stateless on the server. The client-server communication is constrained by no client context being stored on the server between requests. Each request from any client contains all the information necessary to service the request, and the session state is held in the client.

- Uniform interface: This is fundamental to the design of any RESTful system.

  - Resource identification in requests: Individual resources are identified in requests, for example using URIs in RESTful Web services. The URI should specify the precise resource being requested.

  - Resource manipulation through representations: When a client holds a representation of a resource, it has enough information to modify or delete the resource.

- Cacheability: Clients and intermediaries can cache responses. Responses must therefore, implicitly or explicitly, define themselves as cacheable or not to prevent clients from getting stale or inappropriate data in response to further requests. Well-managed caching partially or completely eliminates some client-server interactions, further improving scalability and performance. Practically, one common way to satisfy this constraint is the Last-Modified response header together with the If-Modified-Since request header. If the HTTP method is GET and the client supplies a date in the If-Modified-Since field, then the server will return a 304 Not Modified status with an empty body if the requested item hasn’t been updated since that date. Otherwise it will return the requested item in the body. Hence the performance and scalability properties follow.

- Layered system: A client cannot ordinarily tell whether it is connected directly to the end server or to an intermediary along the way. So if a proxy or load balancer is placed between the client and server, it won’t affect their communications and there won’t be a need to update the client or server code. Intermediary servers can improve system scalability by enabling load balancing and by providing shared caches. Also, security can be added as a layer on top of the web services, and then clearly separate business logic from security logic. Adding security as a separate layer enforces security policies. Finally, it also means that a server can call multiple other servers to generate a response to the client.

- Code on demand (optional): Servers can temporarily extend or customize the functionality of a client by transferring executable code: for example, compiled components such as Java applets, or client-side scripts such as JavaScript.
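The cacheability constraint above can be sketched as a conditional GET handler. This assumes the standard header pair: the server reports `Last-Modified` in its response, and the client echoes that date back in `If-Modified-Since` on later requests. The `handle_get` function and its return shape are hypothetical, not any framework's API.

```python
from datetime import datetime, timezone
from email.utils import format_datetime, parsedate_to_datetime

def handle_get(resource_modified: datetime, body: bytes, request_headers: dict):
    """Return (status, headers, body) for a GET, honoring If-Modified-Since."""
    headers = {"Last-Modified": format_datetime(resource_modified, usegmt=True)}
    since = request_headers.get("If-Modified-Since")
    if since is not None and resource_modified <= parsedate_to_datetime(since):
        return 304, headers, b""  # the client's cached copy is still current
    return 200, headers, body

modified = datetime(2024, 1, 15, 12, 0, 0, tzinfo=timezone.utc)

# First request: no cached copy, so the full body comes back.
status, headers, _ = handle_get(modified, b"<html>...</html>", {})
print(status, headers["Last-Modified"])  # 200 Mon, 15 Jan 2024 12:00:00 GMT

# Second request: the client sends back the date it was given.
status, _, body = handle_get(modified, b"<html>...</html>",
                             {"If-Modified-Since": headers["Last-Modified"]})
print(status, body)                      # 304 b''
```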

These constraints lead to these properties:

- performance in component interactions

- scalability allowing the support of large numbers of components and interactions among components

- simplicity of a uniform interface

- modifiability of components to meet changing needs (even while the application is running)

- visibility of communication between components by service agents

- portability of components by moving program code with the data

- reliability in the resistance to failure at the system level in the presence of failures within components, connectors, or data

We note some other things about REST:

- The uniform interface constraint implies that the API routing (API Gateway on AWS) mappings (dictionary of URLs to the HTTP functions) should follow some prescribed mapping

- All the HTTP functions (except post) should be idempotent (i.e. no adverse side effect on the system despite repeated calls)

- Only three functions can change the system: put, post, and delete.

- When modifying the system: Post should be used when the server will decide the new resource’s identifier, and put should be used when the client determines the resource’s identifier. By identifier, we mean not just the GUID but the entire path (i.e. the URI, or Uniform Resource Identifier).

- Review the HTTP status codes; they are important to how RESTful services work.
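The put-versus-post distinction and the idempotency point from the notes above can be illustrated with a tiny in-memory store. The `store` dictionary and the `put` and `post` helpers are invented for this sketch and stand in for a real web service.

```python
import uuid

store = {}  # path -> resource body (a stand-in for the server's data)

def put(path: str, body: str) -> str:
    """Client chooses the full path; repeating the call leaves the same state."""
    store[path] = body
    return path

def post(collection: str, body: str) -> str:
    """Server chooses the identifier; every call creates a new resource."""
    path = f"{collection}/{uuid.uuid4()}"
    store[path] = body
    return path

put("/notes/42", "hello")
put("/notes/42", "hello")
print(len(store))  # 1: the repeated put changed nothing

post("/notes", "hello")
post("/notes", "hello")
print(len(store))  # 3: each post added a resource
```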

Summary

- The Internet started as ARPANET in the 1960s with the goal of a decentralized computer network.

- Physically, the Internet is a collection of computers moving bits to each other over wires, cables, and radio signals.

- Like many complex engineering projects, the Internet is broken up into various layers, each concerned with solving only a smaller problem. These layers connect to each other in well-defined interfaces.

- There are many protocols that define how the Internet and its applications should work at the different layers: HTTP, IMAP, SSH, TCP, UDP, IP, etc. In this sense, the Internet is as much a collection of rules for how computers and programs should behave as it is a physical network of computers.

- With the growth of the Internet, advent of WiFi, and e-commerce needs, SSL/TLS was developed to address security concerns.